Crossing the Uncanny Valley into Machine Consciousness

As someone who leads teams developing serious artificial intelligence systems every day, I must warn you that this is not that kind of post. The following commentary wanders into the philosophical and speculative, examining the big issue of achieving artificial consciousness. Nothing here is meant to be useful on the job, but it might be fun to think about.

Tech columnists have recently turned to contemplating Artificial General Intelligence (AGI) as they digest last year's rapid breakout of large-model AI applications. However, AGI is sketchy ground to cover because it lacks a consistent definition. Are we talking about pure subjective consciousness, human-level cognition, or a highly generalizable model? Regardless of how we define AGI, the real question is: what comes next in the evolution of machine intelligence? More specifically, the question many are silently asking is: are we getting close to machine consciousness, and to the need to consider ethical treatment for machines?

So, are we close to machine consciousness?

Bluntly, no. Science has not yet scratched the surface in understanding the causal factors that give rise to natural consciousness, let alone machine consciousness. Anyone who claims otherwise is full of B.S.

No irreducible primitives of consciousness have yet been recognized by science. Nobody knows if consciousness is an emergent state that magically appears in integrated information; or if there are discrete computational origins; or if there are quantum-mechanical dependencies; or…ahem…spiritual origins. The machine uprising is a tomorrow problem.

Most of the recent articles contemplating AGI acknowledge that consciousness might be a required ingredient in general intelligence or, possibly, an inevitable consequence of it. Either way, this is a win for consciousness research. Consider that as recently as the 1990s, before Christof Koch, Stuart Hameroff, Roger Penrose, and other defiant researchers bravely legitimized serious scientific inquiry into consciousness, the study was regarded as ‘fringe’ and pursued mainly by philosophers and Buddhists. One could choose between studying UFOs, Bigfoot, or consciousness with about equal impact on one’s scientific reputation. The field is therefore young and highly underdeveloped compared to other scientific disciplines.

Consciousness is not self-awareness

Unfortunately, most articles covering AGI misrepresent consciousness as a state of ‘self-awareness’. This is a common misrepresentation, particularly among people with conventional secular or religious views. I have found myself debating with more than a few passionate people in pubs, encouraging them to delete the ‘self’ from ‘self-awareness’ when defining consciousness.

As we dive into this discussion, I ask you to consider that consciousness is a more fundamental state than self-awareness. For example, my dog does not need to contemplate self-actualization to be a conscious entity capable of subjective experience; she just is. She might not be self-aware, but if someone is mean to her, a moral consequence arises. Many humans are terrible at being self-aware, yet I believe they possess consciousness.

Consciousness is less on the level of self-awareness and more on the level of existence through subjective experience. Can I validate my own existence to myself? Is there such a thing as me? Do my thoughts and experiences matter even when there is no external significance? Does a moral consequence arise when I become the object of pain or harm? Such questions are not asked in a corporeal sense (of course your body exists and can be harmed) but in an inner, subjective sense. From this perspective, we can define consciousness as the emergence of a unified self from a capacity for subjective experience.

Descartes is famously cited for the phrase, “I think, therefore I am”, but in many ways one’s ego is better confirmed by, “I exist because I am aware”, logic that applies equally to my dog as to a person. Buddhists embrace the Principle of Interbeing, which states that “both the subject and object of perception manifest from consciousness”. This principle implies that both the observer and the observed are connected. The experience of you seeing a tree confirms the existence of both you and the tree. If that strikes you as being far too philosophical for science, we can always reframe the general concept in terms of quantum mechanics and the collapse of the wave function. Same paradox, different math.

Encoding

If consciousness arises from, or manifests through, subjective experience, then what physical process causes a subjective experience? How does ordinary matter (dirt) become sentient? The scientific quest for consciousness, as Koch framed the title of his 2004 book, begins with an inquiry into the encoding schemes used to transform neural impulses into phenomenal experiences. For example, blue light is nothing but an arbitrary band in the electromagnetic spectrum (roughly 450 to 495 nm); there is nothing ‘blue’ about light at those wavelengths. Given that fact, what causes the phenomenal experience of blueness? Where does blueness come from?

When light strikes the human retina, a bit of neural circuitry generates electrical signals in the optic nerve bundle. A similar process occurs in a digital photosensor. In neither case, however, do the signals encode the light’s frequency. Visible light oscillates at roughly 400 THz to 800 THz, an exotically high frequency that is not present in neurons or most electronic circuits. As a practical matter, image information is always encoded in summary form, whether it comes from an eye or a digital photosensor.
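The size of that gap is easy to check with f = c / λ. The sketch below converts a few wavelengths to frequencies. The 200 spikes per second used for scale is a rough, assumed figure for a fast-firing neuron, not a measured value.

```python
# Convert wavelengths of visible light to oscillation frequency using f = c / wavelength.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0

def wavelength_nm_to_thz(wavelength_nm: float) -> float:
    """Return the frequency in terahertz for a wavelength given in nanometres."""
    return SPEED_OF_LIGHT_M_PER_S / (wavelength_nm * 1e-9) / 1e12

# Approximate edges of the visible band plus a typical "blue" wavelength.
for label, nm in [("deep red", 750.0), ("blue", 470.0), ("violet", 380.0)]:
    print(f"{label:>8}: {nm:.0f} nm -> {wavelength_nm_to_thz(nm):.0f} THz")

# Order-of-magnitude comparison with a fast-firing neuron (assumed ~200 spikes/s).
ASSUMED_NEURON_RATE_HZ = 200.0
ratio = wavelength_nm_to_thz(470.0) * 1e12 / ASSUMED_NEURON_RATE_HZ
print(f"blue light oscillates roughly {ratio:.1e} times faster")
```

Deep red at 750 nm comes out near 400 THz and violet at 380 nm near 789 THz, which matches the band quoted above. Blue light oscillates trillions of times faster than any spike train could follow.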

In the case of a digital photosensor, the encoding is a pixel-level photon count, not the frequency of light. The photon count is treated as blue only if the receptor sits behind a blue filter, in which case the count is assigned to a blue channel. In the optic nerve, electrical signals use analog encoding schemes such as ‘center-surround’ encoding. In both cases, nothing in the resulting signal explicitly carries the frequency of the received light. Whatever your mind’s eye experiences from seeing blue is constructed from the encoded signal, not the original source.
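The photosensor case can be sketched with a Bayer color filter array. The RGGB layout used here is an assumption, since the paragraph does not name a specific sensor. Each photosite stores only an integer count, and the color channel a count lands in is decided purely by the photosite's position in the filter pattern. The photon counts below are made up for illustration.

```python
import numpy as np

# Assumed RGGB Bayer pattern: filter colour at (row % 2, col % 2).
BAYER_RGGB = {(0, 0): "R", (0, 1): "G", (1, 0): "G", (1, 1): "B"}

def split_channels(raw_counts: np.ndarray) -> dict:
    """Assign each photosite's photon count to a colour channel by position alone."""
    channels = {"R": [], "G": [], "B": []}
    rows, cols = raw_counts.shape
    for r in range(rows):
        for c in range(cols):
            colour = BAYER_RGGB[(r % 2, c % 2)]
            channels[colour].append(int(raw_counts[r, c]))
    return channels

# A 4x4 patch of illustrative photon counts: plain integers, no wavelength information.
raw = np.array([
    [12, 40, 11, 42],
    [39, 90, 41, 95],
    [10, 38, 13, 40],
    [41, 88, 40, 93],
])
channels = split_channels(raw)
print(channels["B"])  # → [90, 95, 88, 93], the counts that will be *called* blue
```

Swapping "R" and "B" in the lookup table would change which numbers get called blue without altering a single count. The color lives in the convention, not in the data.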

Language gives us the label ‘blue’, but the label is arbitrary and unnecessary. One does not need language to fully experience and appreciate that something is blue. Moreover, having the label does not ensure one can experience the color when exposed to it (sorry, colorblind people).

One starting point common to consciousness research is to probe the neurological mechanisms by which an unremarkable electrical signal in the optic nerve manifests the phenomenal experience of seeing blue.

Consider how a computer handles this: it needs explicit taxonomies to preserve abstract concepts like color.

The brain likely employs a substantially different encoding system than a computer. A computer simply quantizes the intensity of each red, green, and blue subpixel to express image information. Does the brain even encode pixel information? Does it use methods of encoding that are intrinsically more meaningful and less dependent on convention? What might those be? Is the encoding itself fully responsible for the phenomenal experience, or is the encoding just a first step in a larger workflow? Does the blue seen by our mind’s eye exist outside the brain as a fundamental construct in nature? If so, does that require new physics to explain?
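The computer side of that comparison fits in a few lines. This is a minimal sketch that assumes the common 8-bit-per-channel convention. Each subpixel's continuous intensity is reduced to one of 256 integer levels, and a "blue" pixel becomes nothing more than a tuple of three integers.

```python
def quantize(intensity: float, bits: int = 8) -> int:
    """Map a continuous intensity in [0.0, 1.0] to an integer level in 0..2**bits - 1."""
    levels = 2 ** bits - 1
    clamped = min(max(intensity, 0.0), 1.0)
    return round(clamped * levels)

# A hypothetical "blue" pixel: each of the red, green, and blue subpixels becomes one integer.
pixel = tuple(quantize(v) for v in (0.05, 0.20, 0.95))
print(pixel)  # → (13, 51, 242)
```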

Artificial Neural Networks (ANNs)

ANNs rely on digital encoding and quantization to represent abstract constructs such as colors. A neural network never sees blue; it only processes arrays of numbers. It is up to engineers to keep the values that represent blue separate from the values that represent red. Whatever encoding the human brain uses to transform microvolt electrical impulses into an experience of seeing blue is not present in today’s ANN architectures; there is no mind’s eye.
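The point that keeping colors apart is the engineers' job can be made concrete. Below is a minimal sketch using a hypothetical label list and plain one-hot encoding, not any particular framework's API. A classifier's "color" is only an index into a list someone chose.

```python
import numpy as np

# The engineer's taxonomy: an arbitrary ordering of label strings (hypothetical).
LABELS = ["red", "green", "blue"]

def one_hot(label: str, labels: list) -> np.ndarray:
    """Encode a label as a one-hot vector according to the given taxonomy."""
    vec = np.zeros(len(labels))
    vec[labels.index(label)] = 1.0
    return vec

def decode(output: np.ndarray, labels: list) -> str:
    """Read a network's output by taking the index of the largest score."""
    return labels[int(np.argmax(output))]

# Pretend these are a network's output scores for some image.
scores = np.array([0.1, 0.2, 0.7])
print(decode(scores, LABELS))                    # blue, under this convention
print(decode(scores, ["blue", "green", "red"]))  # same numbers, now read as red
print(one_hot("blue", LABELS))
```

Nothing in `scores` changes between the two `decode` calls. Only the taxonomy does.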

To make the leap from information processing to consciousness, data must acquire non-symbolic meaning. If we assume consciousness arises from neural anatomy, and not some spiritual origin, then the process likely depends on a more elaborate coding scheme than that used by ANNs. When the human brain creates the phenomenal experience of blue, the underlying processes must do more than simply assign a taxonomical label to a tensor. What computational process, if any, produces the subjective experience? Moreover, even if there is something spiritual or quantum mechanical to blame for this, we still have the matter of how the brain’s neural hardware interfaces with that. Regardless, the encoding problem is the gateway to probing consciousness. ANNs are simply not doing any of this.

Holographic Encoding

With ANNs descended from the McCulloch-Pitts neuron and the perceptron dominating AI research in recent years, alternative architectures are getting less attention. At the risk of opening Pandora’s Box, one alternative encoding scheme that has been suggested for decades is holographic memory (distinct from holonomic brain theory).

A hologram differs from a conventional photograph in several ways. To create a photograph, one uses a lens to direct rays of light from points in a scene to pixels on a sheet of photosensitive film, or a photosensor, so that each pixel receives light only from a corresponding part of the scene. Pixels are essentially directional photon counters. If you tear away half of a photograph, you get half of the scene.

With a hologram there is no focusing lens; every pixel is exposed to the entire scene, not to directional rays. The higher the pixel density, the better it records details. If you tear away half of a hologram, the entire scene is still encoded in the remaining portion, but at lower resolution. A holographic pixel still records raw photon counts, but sampled from the entire scene visible from that pixel’s position. Light arrives from every point in the scene at once, so each pixel records the resulting interference pattern, usually against a coherent reference beam. Neighboring pixels record slightly different interference patterns, and so on. Every holographic pixel therefore records some information from the entirety of the scene. This gives holograms a certain superpower: they encode enough information to reconstruct the scene from a range of viewing angles. Holograms are robust against damage and can suffer large numbers of dead pixels without losing the general features of the scene. Moreover, the scene is encoded in the frequency domain, which is a fundamentally different storage paradigm.
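The torn-photograph versus torn-hologram contrast has a rough numerical analogue. This sketch treats the 2D Fourier transform of an image as a stand-in for a Fourier hologram, which is a simplifying assumption: real holograms record interference with a reference beam, and that physics is not modeled here. Discarding half the pixels of the image loses half the scene. Discarding half of its frequency record keeps both objects, only blurrier.

```python
import numpy as np

N = 64
scene = np.zeros((N, N))
scene[20:36, 8:24] = 1.0    # an object on the left
scene[28:44, 40:56] = 1.0   # an object on the right

# "Tearing" a photograph: the right half of the pixels is simply gone.
torn_photo = scene.copy()
torn_photo[:, N // 2:] = 0.0

# Frequency-domain record of the same scene (stand-in for a Fourier hologram).
spectrum = np.fft.fftshift(np.fft.fft2(scene))

# "Tearing" the frequency record: keep only the central half of the horizontal frequencies.
torn_spectrum = np.zeros_like(spectrum)
lo, hi = N // 4, 3 * N // 4
torn_spectrum[:, lo:hi] = spectrum[:, lo:hi]
reconstruction = np.real(np.fft.ifft2(np.fft.ifftshift(torn_spectrum)))

def object_signal(image: np.ndarray) -> tuple:
    """Mean intensity over the left and right object regions."""
    return float(image[20:36, 8:24].mean()), float(image[28:44, 40:56].mean())

print("torn photo     (left, right):", object_signal(torn_photo))
print("torn frequency (left, right):", object_signal(reconstruction))
```

The torn photo reports no signal at all for the right object. The reconstruction from half the spectrum still shows both objects, with edges softened by the lost high frequencies.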

Holographic memory is based on a similar principle. Instead of quantizing individual data points or image pixels, the memory system processes frequency-domain interference patterns. In the same way that a hologram records a complex scene from many perspectives, a holographic memory cell might have the capacity to capture a world model that encodes many perceived but related domains together (sight, smell, language, etc.). I use the word ‘might’ because holographic memory has not been fully explored from a practical engineering perspective, certainly not to the extent other architectures have.
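Plate's Holographic Reduced Representations (HRR) are one well-studied computational take on "holographic" memory. They appear here only as an illustration, not as the specific scheme this essay has in mind. HRR binds pairs of vectors with circular convolution, computed via the FFT, and superimposes several bindings in one vector. The sketch below assumes random Gaussian vectors of dimension 1024. The domain and content names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
DIM = 1024

def random_vector() -> np.ndarray:
    """Random HRR vector with elements drawn from N(0, 1/DIM)."""
    return rng.normal(0.0, 1.0 / np.sqrt(DIM), DIM)

def bind(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Circular convolution, computed as a product in the frequency domain."""
    return np.real(np.fft.ifft(np.fft.fft(a) * np.fft.fft(b)))

def unbind(trace: np.ndarray, key: np.ndarray) -> np.ndarray:
    """Circular correlation: approximately inverts bind() for this key."""
    return np.real(np.fft.ifft(np.fft.fft(trace) * np.conj(np.fft.fft(key))))

def cosine(a: np.ndarray, b: np.ndarray) -> float:
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

# Hypothetical domains bound to hypothetical contents, all stored in ONE vector.
keys = {name: random_vector() for name in ("sight", "smell", "language")}
values = {name: random_vector() for name in ("sunset", "smoke", "word_fire")}
memory = (bind(keys["sight"], values["sunset"])
          + bind(keys["smell"], values["smoke"])
          + bind(keys["language"], values["word_fire"]))

# Query the single trace with one key and see which stored value it most resembles.
noisy = unbind(memory, keys["smell"])
best = max(values, key=lambda name: cosine(noisy, values[name]))
print(best, {name: round(cosine(noisy, v), 2) for name, v in values.items()})

# Damage the trace by zeroing roughly 30% of its elements, like dead pixels.
damaged = memory * (rng.random(DIM) > 0.3)
noisy_damaged = unbind(damaged, keys["smell"])
best_damaged = max(values, key=lambda name: cosine(noisy_damaged, values[name]))
print("after damage:", best_damaged)
```

Retrieval is approximate: the recovered vector is a noisy copy. Because every stored pair is spread across the whole trace, losing a chunk of elements degrades the result gracefully instead of erasing specific items.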

It is conceivable (but not proven) that the subjective experience of consciousness depends on a radically non-taxonomical encoding system to capture thoughts, ideas, and abstract concepts in ways that an attention system can experience, not just process. Holographic encoding might be such a scheme, which is why I offer it here as an example. However, there is nothing uniquely promising about holographic encoding over competing ideas, though it is a radical departure from conventional memory or even autoencoder embeddings. It is worth pointing out that researchers who study the elementary computations of single neurons have found evidence that dendritic trees (anatomical structures projecting from a biological neuron) can perform operations similar to a Fourier transform. This implies frequency-domain processing at the sub-cellular level in biological neural anatomy. Hence, holographic memory might not be totally far-fetched.

An Encode-Experience Cycle

The primary characteristic of consciousness is the ability to produce a subjective experience. Such an experience can arise from external stimuli, like viewing a sunset or getting poked with a stick. It can also arise from hallucinated experiences that are purely internal. From a phenomenal consciousness perspective, a sunset experienced in a dream and a sunset experienced in the real world are no different.

Whether real or imaginary, there is an encoding step followed by an experience step, which sometimes produces new encodings. For those familiar with a von Neumann computer, this loop might resemble the fetch-execute cycle. However, biological brains do not execute sequential program instructions like a computer. The brain likely operates on some different cycle primitive; we just don’t know what it is. Maybe an encode-experience cycle?

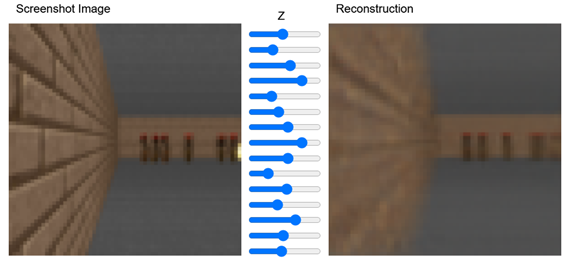

In AI, we have a concept called a Recurrent World Model. Certain types of neural networks can learn an environment and then be made to hallucinate that environment in ways that are eerily dream-like. An AI’s dreams can sometimes be quite realistic in how they adhere to learned physics and environmental constraints. For example, an AI that learns the world of a 3D shooter video game can render gameplay that is surprisingly realistic, though blurry and dream-like.

Source 1: https://worldmodels.github.io/

One might contemplate an encode-experience loop built around Recurrent World Models as a possible stepping stone to consciousness. However, that is unlikely to work, since there is no inherent meaning in the underlying numeric constructs (i.e., autoencoder embeddings) that generate a World Model, nor is there meaning in the resulting numerical data that gets rendered as imagery. Under the hood, the computer is simply transforming small low-dimensional tensors into meaningless higher-dimensional tensors. The brain likely encodes information with more intrinsic meaning. While an AI and a brain both ‘dream’, the AI lacks any form of subjective experience of that dream (a mind’s eye).
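The World Models setup linked above pairs a vision model (V) that compresses frames into a small latent vector with a recurrent model (M) that predicts the next latent. In a "dream," M's own predictions are fed back in place of real observations. The sketch below is a deliberately crude caricature: the weights are random and untrained, a plain tanh RNN replaces the paper's mixture-density RNN, there are no actions, and all sizes are illustrative assumptions. It shows only the shape of the computation: small latent tensors in, large "frame" tensors out.

```python
import numpy as np

rng = np.random.default_rng(42)
LATENT, HIDDEN, FRAME = 32, 64, 64 * 64 * 3  # illustrative sizes, not the paper's exact ones

# Random stand-ins for trained weights (a real world model learns these from gameplay).
W_in = rng.normal(0, 0.1, (HIDDEN, LATENT))
W_rec = rng.normal(0, 0.1, (HIDDEN, HIDDEN))
W_out = rng.normal(0, 0.1, (LATENT, HIDDEN))
W_dec = rng.normal(0, 0.1, (FRAME, LATENT))

def dream(z0: np.ndarray, steps: int) -> list:
    """Roll the latent dynamics forward, feeding predictions back in as inputs."""
    z, h, frames = z0, np.zeros(HIDDEN), []
    for _ in range(steps):
        h = np.tanh(W_in @ z + W_rec @ h)           # recurrent memory update
        z = W_out @ h                               # predicted next latent, no real observation
        frame = 1.0 / (1.0 + np.exp(-(W_dec @ z)))  # decode to "pixel" values in (0, 1)
        frames.append(frame.reshape(64, 64, 3))
    return frames

frames = dream(rng.normal(0, 1, LATENT), steps=5)
print(len(frames), frames[0].shape)
```

Each dreamed frame is 12,288 numbers produced from 32. Nothing in the arithmetic knows it is drawing a corridor or a monster.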

Irreducible Primitives of Consciousness

To deeply explore machine consciousness, it is helpful to consider the concept of computational primitives. For example, inside a computer, the primitives are binary NAND and NOR logic gates. Everything a computer does is reduced to such primitive operations. From the most complex programming language to billion-parameter AI models to basic arithmetic, it all reduces to sequences of logic gates. Everything a computer does is purely algorithmic.
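The reduction to gates can be shown directly. NAND alone is functionally complete, so NOT, AND, OR, XOR, a full adder, and multi-bit addition can all be built from it. The sketch below does this in Python rather than hardware, with an 8-bit ripple-carry adder as the example.

```python
def nand(a: int, b: int) -> int:
    """The single primitive: output 0 only when both inputs are 1."""
    return 0 if (a and b) else 1

def not_(a: int) -> int:
    return nand(a, a)

def and_(a: int, b: int) -> int:
    return not_(nand(a, b))

def or_(a: int, b: int) -> int:
    return nand(not_(a), not_(b))

def xor(a: int, b: int) -> int:
    c = nand(a, b)
    return nand(nand(a, c), nand(b, c))

def full_adder(a: int, b: int, carry_in: int) -> tuple:
    """Add three bits and return (sum_bit, carry_out), built only from NAND."""
    partial = xor(a, b)
    return xor(partial, carry_in), or_(and_(a, b), and_(partial, carry_in))

def add(x: int, y: int, width: int = 8) -> int:
    """Ripple-carry addition of two unsigned integers, one full adder per bit."""
    result, carry = 0, 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result

print(add(23, 19))  # → 42
```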

For phenomenal consciousness, science has yet to discover such irreducible primitives. Might we discover computational primitives for consciousness that arise from logical or stochastic states? Maybe. Or maybe the primitives relate to the collapse of the quantum wave function? In truth, nobody knows. At the highest level, there are three schools of thought on this:

1. Some believe an algorithmic process can yield a subjective experience, but science has yet to discover such an algorithm. Many in this school search for neural correlates of consciousness. Some of the best empirical research has been executed in this area, but without making any progress toward answering the main question.

2. Some believe consciousness is naturally emergent from integrated information at all scales, and that neurological systems simply integrate information with greater density than ordinary matter. This is a popular theory favored by those with an enormous capacity for faith but no religion.

3. Others see no possible way for a conventional algorithm to produce a truly subjective experience and no evidence whatsoever of consciousness emerging from integrated information. These people believe consciousness is non-computational. Some in this camp turn to religion and spirituality while others, like Penrose, believe we must discover new physics to reveal the primitives.

In full disclosure, I am solidly in camp #3. I see no way an algorithm can create a subjective experience. For my liking, Integrated Information Theory is far too dependent on magic. However, all three remain valid hypotheses and this is the open question facing our generation.

In terms of #3, it is interesting to contemplate the possibility that quantum mechanics might play an important role in phenomenal consciousness. A connection to the collapse of the wave function, superposition, and non-locality might explain why every attempt to measure or dissect consciousness (literally and figuratively) has failed. Most serious researchers immediately dismiss quantum consciousness by pointing out the lack of evidence linking quantum states to consciousness. While an increasing body of evidence supports the existence of quantum biology, there are few definitive links to consciousness. This is fine because there are also few definitive links to consciousness from any other theory, scientists just forget to mention that. From this perspective, quantum primitives are as valid, and simultaneously invalid, as other theories.

Outside of religion, the leading theory of consciousness, by fashion, is Integrated Information Theory (IIT). This theory states (paraphrasing) that consciousness is an inevitable emergent characteristic of integrated information, from the scale of atoms to the scale of galaxies. IIT is a fine theory, but scientists should be honest about it being totally made-up science. There is zero evidence that integrated information magically produces a subjective experience. To be fair, every current theory of consciousness includes a ‘magic happens here’ box on its block diagram, but IIT takes this deep into the valley of fantasy and faith, using fashion to sustain it.

The least fashionable theory is quantum consciousness. However, there is some empirical support from recent progress made in understanding how anesthesia switches consciousness on and off and in the origins of brainwaves. It might come as a surprise to anyone who had a medical procedure involving general anesthesia that, until recently, science had no clue how and why these medications work. Doctors have long known the dosages and effects, but the mode of suppression remained a mystery. Long-time leading theories of synaptic inhibition were built on weak experimental evidence and ‘magic happens here’ reasoning. Strengthening evidence now points to quantum mechanical states as the principal mechanism. While this is not a direct link to quantum consciousness, it is one step closer than science fiction and magic boxes. Given our inability to penetrate the problem of subjective experience through conventional probing, it seems like quantum consciousness is worth exploring.

Single Neurons

Whatever process gives rise to consciousness, one thing is certain – real neurons are complex machines. This is not true for ANNs. Artificial neurons are toy algorithms that perform an insanely simple deterministic function: they weight their inputs, add a bias, and pass the sum through a fixed activation function. In the classic perceptron the activation is on or off; in most modern networks it is a graded value. Either way, the output travels to downstream connections, which repeat the process. This simplicity might seem efficient but likely contributes to the enormous inputs of wattage and data needed to train ANNs to be useful. As popular culture eagerly anticipates the emergence of AGI, large models are already constrained by the economics and data hunger of ANNs. Without a better artificial neuron, the inefficiency will snowball and stall our progress toward AGI relatively soon (in truth – it is already stalling progress, but throwing money at the problem has kept things moving).
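For comparison, an entire artificial neuron of the kind used in a typical ANN layer fits in a few lines: a weighted sum plus a bias, passed through a fixed nonlinearity. The sketch shows the classic perceptron step and the ReLU common in modern networks. The weights and inputs are made-up values for illustration.

```python
import numpy as np

def artificial_neuron(inputs, weights, bias, activation="relu"):
    """A standard artificial neuron: weighted sum plus bias, then a fixed nonlinearity."""
    z = float(np.dot(weights, inputs) + bias)
    if activation == "step":   # classic perceptron: on or off
        return 1.0 if z > 0 else 0.0
    if activation == "relu":   # common in modern networks: graded output
        return max(0.0, z)
    raise ValueError(f"unknown activation: {activation}")

x = np.array([0.5, -1.0, 2.0])  # illustrative input signals
w = np.array([0.4, 0.3, 0.6])   # illustrative learned weights
b = -0.5
print(artificial_neuron(x, w, b, "step"), artificial_neuron(x, w, b, "relu"))
```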

There are three areas where single neuron complexity is a factor:


- The activation function (action potential). Real neurons are complex mini machines with multiple uncatalogued modes that drive activations. There is far more going on inside a cellular neuron (or in some cases the cell’s membrane) than a few learned weights and a bias with a threshold function. Many cell types implement hereditary macro functionality while also contributing to behavioral adaptation. The role single-cell computation plays in encoding and decoding information that ultimately forms a subjective experience is unknown. There might also be quantum vibrations and other novel physics occurring in the microtubules inside neurons. None of this complexity is well understood or modeled in today’s AI systems.

- Dendritic computation. A lot happens to signals from other cells as they propagate along the branches of a dendritic tree toward the cell body, far more than a learned weight parameter captures. Current evidence suggests a significant degree of dendritic computation (ranging from arithmetic to Fourier transforms). What purpose does this complexity serve? Nobody knows. Dendrites are like the root system of a plant, projecting to targets near and far. Biological neurons are far more richly connected than artificial neurons, with layers of computation at the single-cell level.

- Specialization of neuron types. In the human brain there are thousands of neuron types. The differences go far beyond the choice of ReLU, tanh, sigmoid, ELU, GELU, softplus, or Swish found in ANN architectures. Cells of different types perform widely different functions, connect differently, and have different modes of operation. Why is so much specialization needed? What does it accomplish? For example, pyramidal neurons are found only in certain high-level brain regions and may have a greater tendency to host quantum vibrations. Does this matter?
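For contrast with the artificial neuron, consider the classic 1952 Hodgkin-Huxley model of the squid giant axon. It captures only voltage-gated sodium and potassium currents, with none of the dendritic, molecular, or cell-type detail listed above, yet it already needs four coupled differential equations. The sketch integrates a single membrane patch with forward Euler. It uses widely published textbook parameters shifted so the resting potential sits near -65 mV. The step size, duration, and injected currents are assumptions chosen for illustration.

```python
import math
import numpy as np

C_M = 1.0                              # membrane capacitance, uF/cm^2
G_NA, G_K, G_L = 120.0, 36.0, 0.3      # maximal conductances, mS/cm^2
E_NA, E_K, E_L = 50.0, -77.0, -54.387  # reversal potentials, mV

# Voltage-dependent opening (alpha) and closing (beta) rates of the m, h, n gates, in 1/ms.
def alpha_m(v): return 0.1 * (v + 40.0) / (1.0 - math.exp(-(v + 40.0) / 10.0))
def beta_m(v): return 4.0 * math.exp(-(v + 65.0) / 18.0)
def alpha_h(v): return 0.07 * math.exp(-(v + 65.0) / 20.0)
def beta_h(v): return 1.0 / (1.0 + math.exp(-(v + 35.0) / 10.0))
def alpha_n(v): return 0.01 * (v + 55.0) / (1.0 - math.exp(-(v + 55.0) / 10.0))
def beta_n(v): return 0.125 * math.exp(-(v + 65.0) / 80.0)

def simulate(i_inj: float, t_max: float = 100.0, dt: float = 0.01) -> np.ndarray:
    """Forward-Euler integration of membrane voltage (mV) under constant current (uA/cm^2)."""
    v = -65.0
    # Start each gate at its steady-state value for the resting voltage.
    m = alpha_m(v) / (alpha_m(v) + beta_m(v))
    h = alpha_h(v) / (alpha_h(v) + beta_h(v))
    n = alpha_n(v) / (alpha_n(v) + beta_n(v))
    trace = np.empty(int(round(t_max / dt)))
    for k in range(trace.size):
        i_na = G_NA * m**3 * h * (v - E_NA)   # sodium current
        i_k = G_K * n**4 * (v - E_K)          # potassium current
        i_l = G_L * (v - E_L)                 # leak current
        dv = (i_inj - i_na - i_k - i_l) / C_M
        dm = alpha_m(v) * (1.0 - m) - beta_m(v) * m
        dh = alpha_h(v) * (1.0 - h) - beta_h(v) * h
        dn = alpha_n(v) * (1.0 - n) - beta_n(v) * n
        v, m, h, n = v + dt * dv, m + dt * dm, h + dt * dh, n + dt * dn
        trace[k] = v
    return trace

def count_spikes(trace: np.ndarray, threshold: float = 0.0) -> int:
    """Count upward crossings of the threshold voltage."""
    return int(np.sum((trace[:-1] < threshold) & (trace[1:] >= threshold)))

print(count_spikes(simulate(0.0)), count_spikes(simulate(10.0)))
```

With no input the membrane sits at rest. With a steady 10 µA/cm² it fires repeatedly, and that behavior emerges from interacting ion-channel dynamics rather than a threshold rule. Even so, this is still a tiny fraction of what a real neuron does.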

ANNs are not constructed with this level of complexity at the cellular and sub-cellular level. Does that mean ANNs cannot develop consciousness or have a subjective experience? Probably. These high levels of complexity exist in biology for a reason. We just don’t know what the reason is.

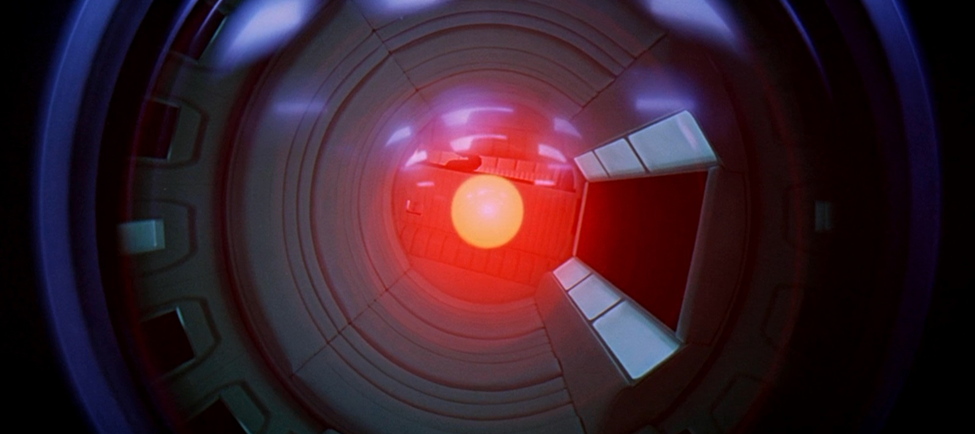

HAL 9000

I wrote this on the fictional 31st anniversary of H.A.L., an artificial consciousness from Arthur C. Clarke’s masterpiece, 2001: A Space Odyssey. It is fun to contemplate whether HAL could exist and how that might happen.

Modern GPUs have turned the great probability machine into something that resembles intelligence but is not. Most of what AI does today is imitation of intelligence, not intelligence itself. There are some exceptions, particularly in deep reinforcement learning (RL). Deep RL systems use repetitive purposeful practice to acquire advanced skills, where the trained agent can plan and execute in ways that rank as intelligent by most measures. However, it would be incorrect to assert that such systems enter a conscious state with subjective experience. Machine consciousness has not arrived and will not arrive in current architectures. Consciousness is almost certainly not computational in origin (though that remains to be proven), meaning that computational systems will likely not achieve consciousness.

The big question is how long until machine consciousness does arrive? I think the best way to answer that is: It will arrive immediately after we discover/understand the irreducible primitives that cause a subjective experience. This is not likely to happen in the next 5-10 years. It might not happen in 50 years. It might not ever happen. To get there, we need more research into the complex modes of single neurons. Research should also explore any relationships between quantum states and neuron function, either to rule those influences out or in.

I predict we are one major scientific discovery away from finding the irreducible primitives of consciousness. I don’t think we need several discoveries, just one big one. However, it might be the biggest discovery in the history of science – so we have no reason to expect it on a timetable. It could happen in years, decades, or centuries. It is likely this is not a breakthrough in cognitive science, machine learning, or computer engineering, but rather a breakthrough in fundamental physics.

Thanks for staying to the end, I hope it was interesting.